Let me start by saying that none of this is groundbreaking news – in fact it makes a lot of sense if you think about it a bit.

A new Panda algorithm update (v4.2) was recently announced, and the rollout is expected to take months. Not coincidentally, lots of SEOs have recently received notifications in Search Console (formerly Webmaster Tools) warning that blocking CSS and JavaScript files in robots.txt can cause issues.

How are these two things related? If you block page elements that are placed using CSS or JS, Googlebot won’t be able to tell that they’re there, and can read your page as being “thinner” than it really is. For example: a JS-based image slider that takes up a lot of real estate on the page, when blocked, makes the page appear rather empty (assuming there isn’t a lot of other content present). True story – I encountered that exact scenario just this week.
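If you want a quick sanity check before opening Search Console, here is a minimal sketch using Python's standard-library `urllib.robotparser`. The robots.txt rules, the `example.com` URLs and the `blocked_for_googlebot` helper are all made up for illustration. Also note that the standard-library parser does plain prefix matching and does not implement Google's wildcard extensions (`*` and `$`), so treat the result as a rough approximation of what Googlebot would do, not a definitive answer.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that blocks the asset directories (assumption for illustration).
ROBOTS_TXT = """\
User-agent: *
Disallow: /assets/js/
Disallow: /assets/css/
"""


def blocked_for_googlebot(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs from `urls` that `robots_txt` disallows for `user_agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical URLs: the page itself plus the slider script and stylesheet it depends on.
urls = [
    "https://www.example.com/",
    "https://www.example.com/assets/js/slider.js",
    "https://www.example.com/assets/css/main.css",
]

for url in blocked_for_googlebot(ROBOTS_TXT, urls):
    print("Blocked:", url)
```

In this made-up setup the page itself is crawlable, but the slider script and the stylesheet are not, which is exactly the situation that can make a page look emptier to Googlebot than it does to a visitor.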

Now, I don’t often advocate for lots of image-only pages because they already run the risk of being “thin” and receiving the black mark from the all-powerful Google (Google is dead. Long live Google!).

Googlebot making the infamous “to index or not to index” decision when it encounters thin web pages.

Google (and others – Facebook, etc.) have a tendency to make an announcement that causes a frenzied reaction, even when the announcement isn’t particularly new (case in point: Facebook’s recent post about Publisher markup).

The same is true here. Several months ago I read a blog post by Chad at Seer Interactive that referenced unblocking CSS & JS. That post turned me on to an even older article by Joost de Valk that talked about the pitfalls of said robots.txt setup way back when Panda 4.0 was announced (June 2014). Then just recently I discovered an even earlier post on Search Engine Land where Vanessa Fox wrote about unblocking JS & CSS (although there was no reference to a Panda penalty, so it’s not quite as relevant).

Is My Site Affected?

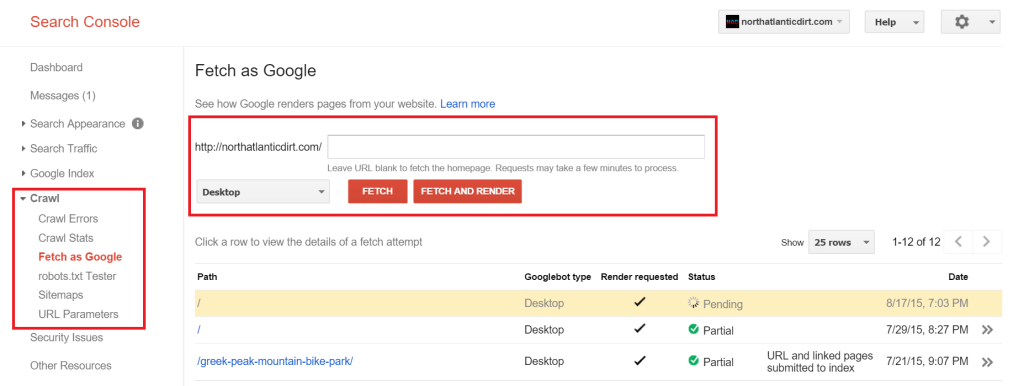

Long story short, if you suspect you might be suffering from a Panda penalty and that blocking JS and CSS is the reason, go into Webmaster Tools (sorry, Search Console!), pick the page of your choice and enter the URL into the Fetch & Render box found under the “Crawl” tab.

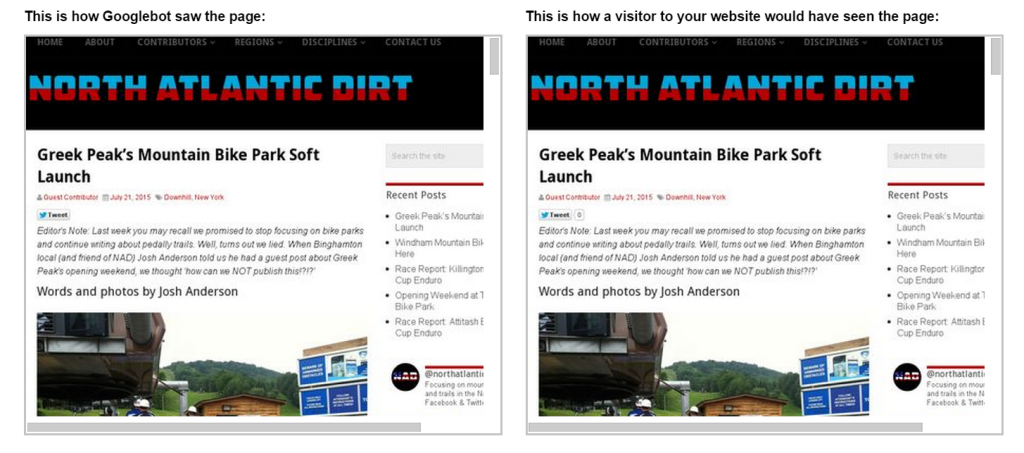

Next, in the section below, click on the URL you chose to fetch and render, and compare what Googlebot sees to what a visitor sees.

In this example, the Googlebot render matches what a visitor sees. If a particular resource were blocked, there might be a big empty space somewhere. As noted previously, JS image sliders are a common example of a resource that might be blocked.

You can spot check different pages to see how they look. Doing so gives you a good understanding of which elements are blocked and how they affect the overall makeup of a page (especially if a blocked element sits in a page template that’s widely used throughout the site).
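If you have a lot of pages to spot check, a rough script can narrow down which ones are worth running through Fetch & Render. The sketch below is not a Google tool and doesn't render anything: it simply pulls the external scripts and stylesheets out of a page's HTML and tests them against a robots.txt using Python's standard library. The `AssetCollector` class, the `blocked_assets` helper, the sample HTML and the robots.txt rules are all hypothetical. It only looks at assets on the page's own host (files on other hosts fall under their own robots.txt), and the standard-library parser ignores Google's wildcard syntax.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse
from urllib.robotparser import RobotFileParser


class AssetCollector(HTMLParser):
    """Collect absolute URLs of external scripts and stylesheets in a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.assets = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "script" and attrs.get("src"):
            self.assets.append(urljoin(self.base_url, attrs["src"]))
        elif tag == "link" and attrs.get("href"):
            rel = (attrs.get("rel") or "").lower().split()
            if "stylesheet" in rel:
                self.assets.append(urljoin(self.base_url, attrs["href"]))


def blocked_assets(page_url, html, robots_txt, user_agent="Googlebot"):
    """Return same-host JS/CSS URLs on the page that robots_txt disallows."""
    collector = AssetCollector(page_url)
    collector.feed(html)

    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())

    host = urlparse(page_url).netloc
    return [
        url
        for url in collector.assets
        if urlparse(url).netloc == host and not parser.can_fetch(user_agent, url)
    ]


# Hypothetical page and robots.txt, for illustration only.
SAMPLE_HTML = """
<html><head>
<link rel="stylesheet" href="/assets/css/main.css">
<script src="/assets/js/slider.js"></script>
<script src="https://cdn.example.net/lib.js"></script>
</head><body><div id="slider"></div></body></html>
"""
SAMPLE_ROBOTS = "User-agent: *\nDisallow: /assets/\n"

for url in blocked_assets("https://www.example.com/gallery", SAMPLE_HTML, SAMPLE_ROBOTS):
    print("Blocked asset:", url)
```

Any page that reports blocked assets is a good candidate for a closer look in Fetch & Render, since that is where you can actually see how much of the layout goes missing.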