Two articles published this week address the issue of rising bicycle costs. The first, by Bicycle Retailer and Industry News (BRAIN), strictly references statistics on rising prices in the cycling industry. The second, by Matt Wragg of Pinkbike, suggests that getting rid of model years could encourage riders to buy more expensive bicycles by delaying their obsolescence (the bicycles, not the customers). While these articles make some good points, I have some suggestions of my own.

Santa Cruz is a wildly successful cycling brand that illustrates my argument. Here’s why they do so well:

1. They make good bikes that ride well, look nice and last a long time.

2. They only make mountain bikes, which allows for more consistent branding.

3. They keep models, mostly unchanged, for several years.

4. They support discontinued models with readily available replacement parts.

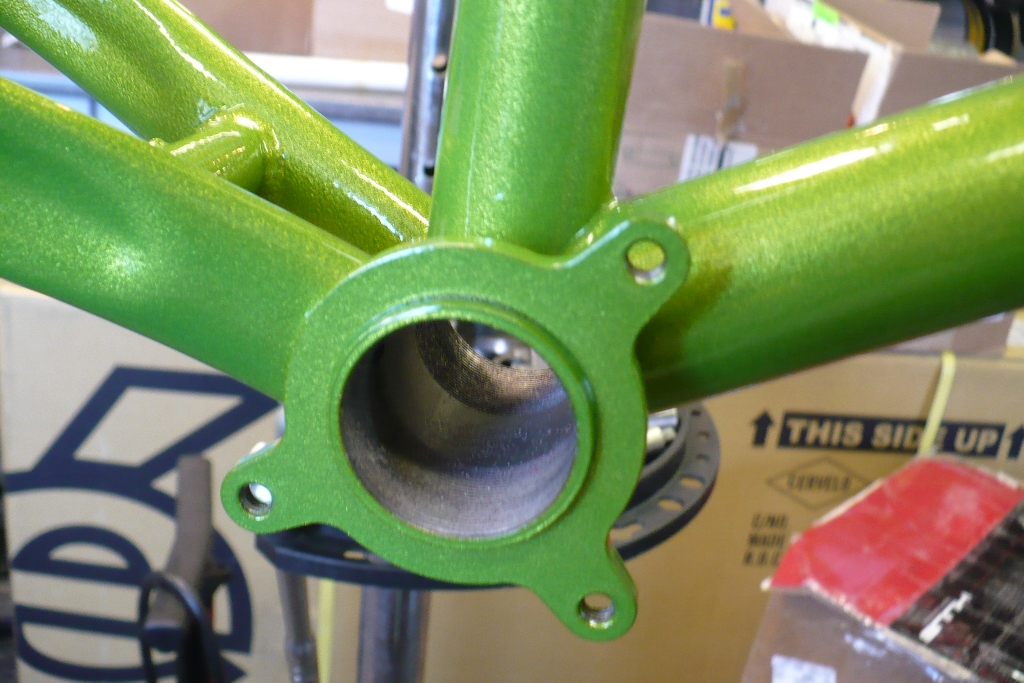

Consequently, they have an excellent brand reputation. Let’s focus on that third point: how long they keep models and how they update them. Although it was recently discontinued, the Blur LT illustrates this point well. They introduced the Blur in 2003 and kept it mostly unchanged until a major redesign in 2008. Version 2.0 carried on into 2013 with minor updates like a tapered headtube, ISCG tabs and 142mm dropouts, but the overall platform stayed the same. That bike was discontinued and replaced by the Bronson, a 150mm, 27.5″-wheeled trail bike which, for all intents and purposes, is a third-generation Blur LT.

The Blur is just one example, but look at other bikes and you’ll see Santa Cruz keeps its models for about four years. Perhaps more importantly, they support those models long after their extinction. Some criticize them for being late to the game when new standards and trends emerge. Indeed, it took some time to add 142mm dropouts to their lineup even after their competition had embraced the standard.

What Santa Cruz is doing is building brand equity. When a bike carries on through multiple years unchanged, it demonstrates stability and shows potential customers that the company stands behind its designs. It reinforces the image that they make great bikes and reaffirms the purchase decisions of current owners. It also gives credibility to their brand and helps customers build an emotional bond with their bikes.

That might sound ridiculous, but consider this: riders want to love their bikes. They create precious memories on their bikes, and every time they look at them, they’re reminded of those events. But it’s hard to develop any attachment when their bike has been completely redesigned less than a year later. Combine that with an industry tendency to bash previous products in an effort to sell new ones and you leave customers feeling dejected and maybe even remorseful.

The last thing a company wants is for customers to feel remorseful about purchasing their products. The marketing plan often seems to be “look, we’ve made everything so much better that you should run out and buy the new one immediately!” Therein lies the problem that Mr. Wragg mentions: most consumers don’t have the financial means to buy a new $5,000 bike every year. And that’s okay! They don’t have to, and we shouldn’t expect them to! Bike companies should focus on building brand equity so that when those customers DO buy another bike, they buy it from the same brand. Build a culture around products and encourage customers to do the same.

Not convinced? Let’s play off Matt Wragg’s line of thinking and make an analogy to the auto industry, more specifically the Honda Civic. Honda is one of the largest auto manufacturers and the Civic, first released in 1972, is one of their best-selling models. In 42 years, the Civic has seen nine different generations. Some quick math reveals an average model life of about 4.7 years. Honda prides itself on reliable cars that inspire customer loyalty. This strategy has paid off, as Honda ranks third nationally in customer loyalty.
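
For anyone who wants to check the quick math, here is a rough sketch. It assumes the 42-year span and nine generations cited above, and it counts the current generation as if it were complete, so treat the result as a ballpark figure and not a precise one:

$$
\text{average model life} = \frac{42\ \text{years}}{9\ \text{generations}} \approx 4.67\ \text{years per generation}
$$

That lands between four and five years per generation, in the same range as the roughly four-year cycle described for Santa Cruz.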

The statistics referenced by BRAIN are worthy of concern. As bike costs rise and the average household income remains the same, companies need to adjust their thinking if they want to continue to be successful.

Sources

Wragg, M. (2014, February 28). Pinkbike poll: Should mountain bike companies consider ditching model years? Retrieved from http://www.pinkbike.com/news/pinkbike-poll-should-mountain-bike-companies-consider-ditching-model-years-2014.html

Report available on ‘turbulent’ 2013 U.S. bike market. (2014, February 24). Retrieved from http://www.bicycleretailer.com/studies-reports/2014/02/24/report-available-turbulent-2013-us-bike-market

Porsche, Cadillac lead automotive brand loyalty improvements in first quarter, according to Polk. (2013, June 5). Retrieved from https://www.polk.com/company/news/porsche_cadillac_lead_automotive_brand_loyalty_improvements_in_first_qtr

Honda Civic history. (n.d.). Retrieved from http://www.edmunds.com/honda/civic/history.html